A user places a request on a Web3 platform, and an autonomous digital agent executes a series of complex cross-chain operations. Liquidity pools are scanned, routes are compared, the best rates are secured, and the transaction is finalized, all in just a few seconds. There is no struggle with multiple apps or endless approvals; the process feels invisible. This is the dream of agentic AI, realized.

Yet an important question remains. With autonomy comes the imperative of accountability.

The issue is no longer how autonomous intelligence will reshape Web3 interactions, but how well platforms calibrate it. While the industry fixates on building more AI-driven systems, the actual competitive advantage lies in developing intelligence that knows its own boundaries. When should agents be trusted to act, and when should humans step in?

The answer rests on the “human edge”: embedding oversight as a feature in the overall architecture of autonomous systems. Here, we explore how autonomy and oversight can be balanced, the patterns that shape human-in-the-loop workflows, and the related areas of concern.

Understanding The Autonomy Paradox

Agentic AI marks a new paradigm: agents as co-workers, not just tools. As in any partnership, the challenge is knowing when to delegate and when to take back control. Current AI agents achieve 30-50% accuracy on complex web and related tasks, and even advanced models score below human-level performance. Yet the idea of full automation asks platforms to deploy agents with greater authority than their reliability warrants.

In Web3, this poses critical risks. While traditional software failures usually mean frustrated users, Web3 failures can mean irreversible transactions and financial losses.

Unsurprisingly, organizations remain hesitant to hand full autonomy to systems without human oversight, particularly where doing so could lead to compliance issues and brand damage. This is the central paradox of Web3: the complexity that makes agents necessary is the same complexity that makes absolute autonomy risky. Users need intelligent assistance because complicated protocols overwhelm their cognitive capacity. But those same users need to keep control over high-stakes financial decisions, because the consequences are irreversible.

The most sophisticated agentic AI ecosystems are designed not to maximize autonomy, but to calibrate it. The ideal ones ask for permission at tipping points, where they need a deeper understanding of the context before acting.

Criteria For Calibrated Agentic AI in Web3

Agentic workflows need to be built for ‘adaptive control’: an infrastructure where systems shift fluidly between autonomy and human guidance, depending on context and confidence. Every action an agent performs can be assessed through five key lenses that determine which should take priority, autonomy or oversight.

- Gravity of Impact: The more serious the potential consequence, the stronger the case for human verification. For example, approving an on-chain treasury allocation calls for oversight; running routine rebalancing cycles may not.

- Reversibility: If an action can’t be easily undone, such as submitting token burns or governance votes, it warrants a ‘pause point’.

- Uncertainty: When agents operate outside the conditions they were trained for or come across new data patterns, low confidence scores should trigger escalation.

- Explainability: If an agent can’t clearly articulate its reasoning or output in human-readable form, oversight is mandatory.

- Regulatory and Ethical Considerations: Compliance-sensitive environments need explicit human checkpoints to ensure accountability.
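As a rough illustration, the five lenses can be expressed as a single check that lists every reason an action should be escalated to a human. This is a minimal sketch, not a description of any production system: the `ActionAssessment` fields, the 0-to-1 scoring scales, and the `IMPACT_LIMIT` and `MIN_CONFIDENCE` thresholds are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class ActionAssessment:
    """Hypothetical summary of one proposed agent action."""
    impact: float               # 0.0 (trivial) to 1.0 (severe); assumed scale
    reversible: bool            # can the action be easily undone?
    confidence: float           # agent's self-reported confidence, 0.0 to 1.0
    explainable: bool           # can the agent give a human-readable rationale?
    compliance_sensitive: bool  # subject to regulatory or ethical review?


# Illustrative thresholds only; a real platform would tune these.
IMPACT_LIMIT = 0.6
MIN_CONFIDENCE = 0.8


def oversight_reasons(a: ActionAssessment) -> list[str]:
    """Return the lenses that call for human review (empty list: proceed)."""
    reasons = []
    if a.impact >= IMPACT_LIMIT:
        reasons.append("gravity of impact")
    if not a.reversible:
        reasons.append("irreversible")
    if a.confidence < MIN_CONFIDENCE:
        reasons.append("uncertainty")
    if not a.explainable:
        reasons.append("not explainable")
    if a.compliance_sensitive:
        reasons.append("regulatory or ethical")
    return reasons


# A routine rebalancing cycle versus an on-chain treasury allocation.
rebalance = ActionAssessment(0.2, True, 0.95, True, False)
treasury = ActionAssessment(0.9, False, 0.9, True, True)

print(oversight_reasons(rebalance))
print(oversight_reasons(treasury))
```

Returning the list of reasons, rather than a bare yes or no, lets the platform tell the user why the agent paused, which supports the explainability lens itself.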

Defining Decision Boundaries for Agentic AI

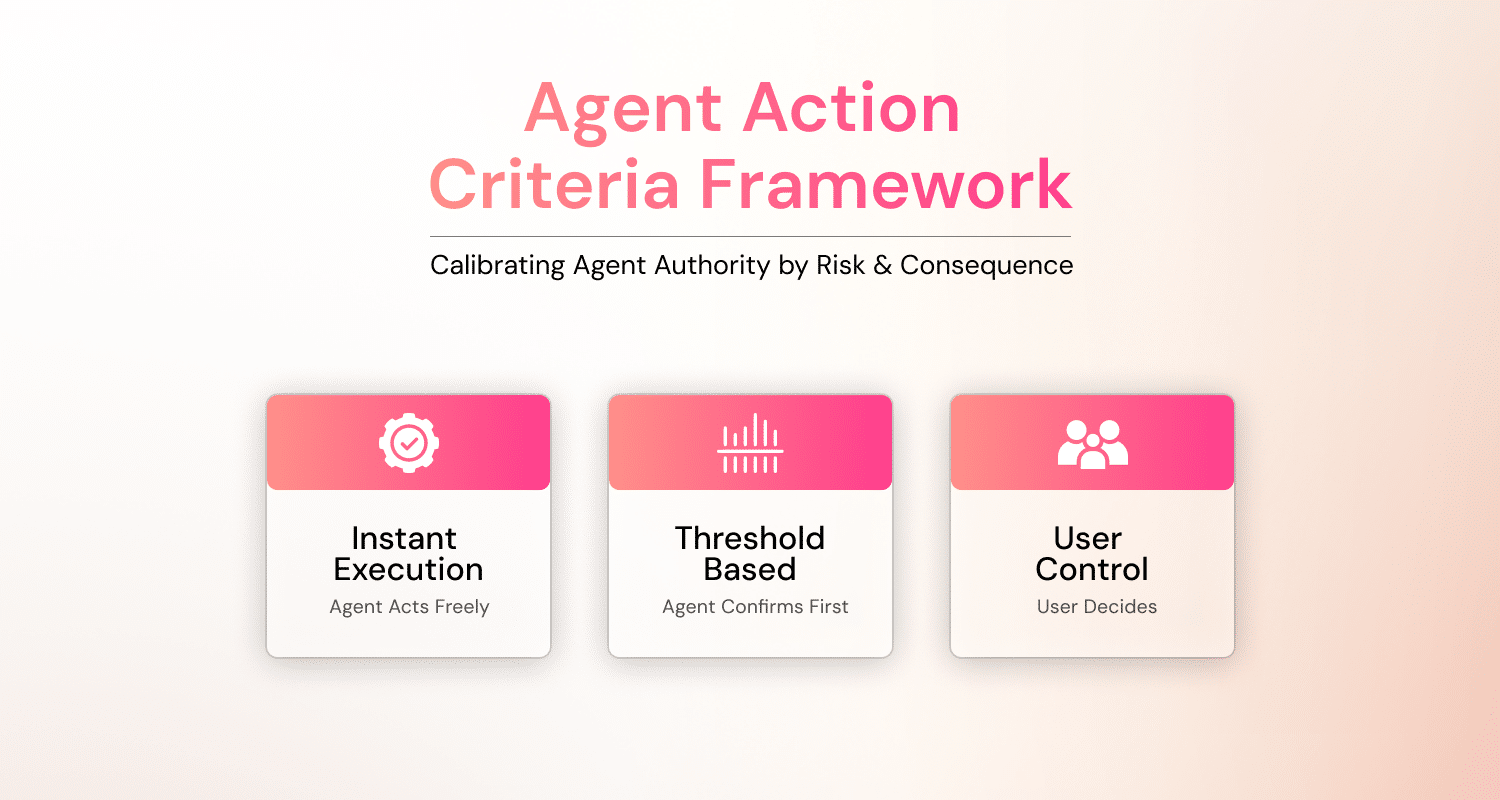

The more autonomous a system becomes, the more essential human oversight is. Three questions determine whether an agent should execute an action automatically, pause for confirmation, or hand the decision to a human: What’s reversible? What’s at stake? What’s the user’s established level of trust?

Balancing autonomy and oversight demands intentional design. Not every decision needs a human gatekeeper, and not every process should run unchecked. The key is to architect flexible human-in-the-loop patterns for agentic AI in Web3: mechanisms that decide when agents should act independently and when humans step in.

Explained below are three broad frameworks drawn from current industry practice and evolving research:

Users don’t trust systems that never ask permission. They trust systems that ask the right questions at the right moments.

The decision boundary between these zones can shift based on accumulated trust, demonstrated competence, and evolving user preferences. But the core principle of the framework stays intact: low-consequence actions execute fully autonomously, medium-consequence actions trigger conditional review, and high-consequence actions require explicit approval.
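The three-zone principle, with a boundary that moves as trust accumulates, might be sketched as follows. The `consequence` and `trust` scores, the numeric limits, and the choice to let trust widen only the autonomous zone while keeping the explicit-approval line fixed are all assumptions for illustration.

```python
from enum import Enum


class Mode(Enum):
    AUTONOMOUS = "execute autonomously"
    CONDITIONAL_REVIEW = "conditional review"
    EXPLICIT_APPROVAL = "explicit approval"


def route(consequence: float, trust: float = 0.0) -> Mode:
    """Map a consequence score to an execution mode.

    Both inputs are hypothetical scores in [0, 1]. Accumulated trust
    raises the ceiling of the autonomous zone; the threshold for
    explicit approval is kept fixed in this sketch.
    """
    if not (0.0 <= consequence <= 1.0 and 0.0 <= trust <= 1.0):
        raise ValueError("consequence and trust must be within [0, 1]")

    auto_limit = 0.3 + 0.2 * trust   # shifts from 0.3 up to 0.5 with trust
    approval_limit = 0.7             # high-consequence line, never relaxed

    if consequence >= approval_limit:
        return Mode.EXPLICIT_APPROVAL
    if consequence < auto_limit:
        return Mode.AUTONOMOUS
    return Mode.CONDITIONAL_REVIEW


print(route(0.4))             # new user: falls in the review zone
print(route(0.4, trust=1.0))  # trusted user: same action runs autonomously
print(route(0.9, trust=1.0))  # high stakes: approval regardless of trust
```

Keeping the upper limit independent of trust is one possible design choice: it means no amount of history lets an agent skip approval on the highest-consequence actions.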

How Abstraxn Instruments Autonomous Intelligence

Abstraxn’s agentic AI network implements calibration as part of its core architecture. Each agent operates within the authority designed for its domain: information flows freely, transactions pause at thresholds, and security decisions stay with the users.

For instance, our informational agents operate with almost full autonomy. When users ask about vault performance, protocol mechanics, or platform features, responses are delivered instantly, with no confirmation friction. Our transactional agents, by contrast, calibrate to the thresholds that platforms configure and that evolve with users. They execute routine swaps within established ranges automatically, but surface confirmations for operations that exceed limits, cross new protocols, or deviate from regular patterns.
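To make the three confirmation triggers concrete, here is a minimal sketch of such a check. It is not Abstraxn's actual implementation or API: `SwapPolicy`, `confirmation_reasons`, the protocol names, and the rule that a swap deviates when it exceeds a multiple of the user's median past amount are all hypothetical.

```python
from dataclasses import dataclass, field
from statistics import median


@dataclass
class SwapPolicy:
    """Hypothetical thresholds a platform might configure per user."""
    max_amount: float                                   # auto-execution limit
    known_protocols: set[str] = field(default_factory=set)
    deviation_factor: float = 3.0                       # multiple of median


def confirmation_reasons(amount: float, protocol: str,
                         past_amounts: list[float],
                         policy: SwapPolicy) -> list[str]:
    """Return why a swap needs user confirmation (empty list: auto-execute)."""
    reasons = []
    if amount > policy.max_amount:
        reasons.append("exceeds limit")
    if protocol not in policy.known_protocols:
        reasons.append("new protocol")
    # With no history there is no pattern to deviate from, so skip this check.
    if past_amounts and amount > policy.deviation_factor * median(past_amounts):
        reasons.append("deviates from regular pattern")
    return reasons


policy = SwapPolicy(max_amount=1000.0, known_protocols={"dex_a"})
history = [100.0, 120.0, 90.0]

print(confirmation_reasons(150.0, "dex_a", history, policy))
print(confirmation_reasons(5000.0, "dex_b", history, policy))
```

The median is used here rather than the mean so that one unusually large past swap does not loosen the pattern check for every later transaction.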

Abstraxn’s framework provides agentic intelligence with safety guardrails. Platforms retain control over where agents execute and where users decide.

Final Word

Autonomy shouldn’t be seen as the end of human involvement, but as its evolution. The aim of agentic AI isn’t to remove humans from the loop, but to redefine their role in it. In this new era of intelligence, humans don’t have to micromanage everything; they set the conditions, thresholds, and ethics under which agents operate.

It would be fair to say that the human-centered future of autonomy will be determined by deciding well when to let go and when to stay in the loop.

The next competitive leap won’t come from how autonomous your systems are, but from how responsibly autonomous they can be.